PSGD Hydraulic Cone Crusher

2022-08-21T02:08:02+00:00

PSG Cone Crusher (Baidu Baike)

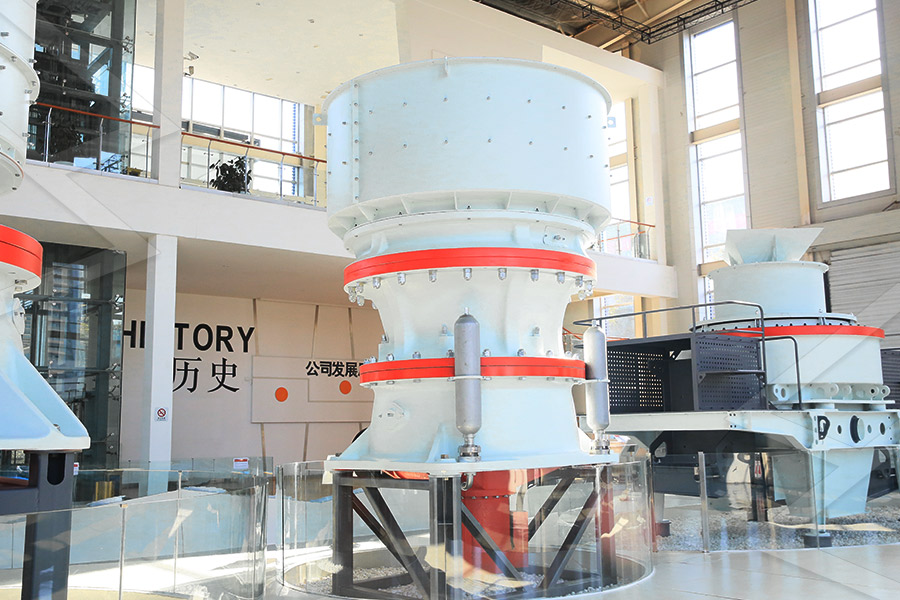

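PSG cone crushers are widely used in the metallurgical, building materials, road construction, chemical, and silicate industries, and are suited to crushing ores and rocks of medium hardness and above. The machine offers high crushing force, high efficiency, and processing …

The ® GP300S™ cone crusher is available in stationary and mobile versions. Advantages: a secondary cone crusher that meets the most demanding secondary crushing applications; stable long-term performance; high productivity and high-quality end … (® GP300S™ cone crusher, Outotec)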

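Full Hydraulic Cone Crusher, Zhongde Heavy Industry (中德重工)

The HXGYS full hydraulic cone crusher is a new type of cone crusher that our company developed by combining the single-cylinder hydraulic cone crusher with the PSG cone crusher, applying advanced design concepts and an optimized design. Feed size: ≤228 mm …

Nov 7, 2022. PSGD (preconditioned stochastic gradient descent) is a general-purpose second-order optimization method. PSGD differentiates itself from most existing methods … (lixilinx/psgdtorch, GitHub)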

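Compound Cone Crusher, Single-Cylinder Cone Crusher, and Impact Sand Maker Manufacturer, Shanghai Guanya (上海冠亚路桥机…)

Shanghai Guanya firmly believes that only more specialized, intelligent testing technology can produce better equipment. We demand excellence from every piece of equipment we produce and take care over each machine. "Last in line for profit, first in line for responsibility." Shanghai Guanya …

Overview gallery of the PSG cone crusher. (PSG cone crusher images, Baidu Baike)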

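Qinyi Luo (骆沁毅), University of Southern California: Building High-Performance Heterogeneous Distributed Training Algorithms

This is a method for efficient distributed machine learning training on heterogeneous platforms. It combines the strengths of the mainstream distributed training algorithm AllReduce and the cutting-edge algorithm ADPSGD, so that it achieves high performance in homogeneous environments while also …

Because the crushing cavity profile, eccentric throw, and countershaft speed can all be changed, and different control methods are available, the ® GP300S™ cone crusher can adapt to any specific production requirement. The adjustable eccentric throw makes it easy to tune the crusher's throughput to match the other equipment. It also lets the crusher reach proper choke-fed conditions … (® GP300S™ cone crusher, Outotec)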

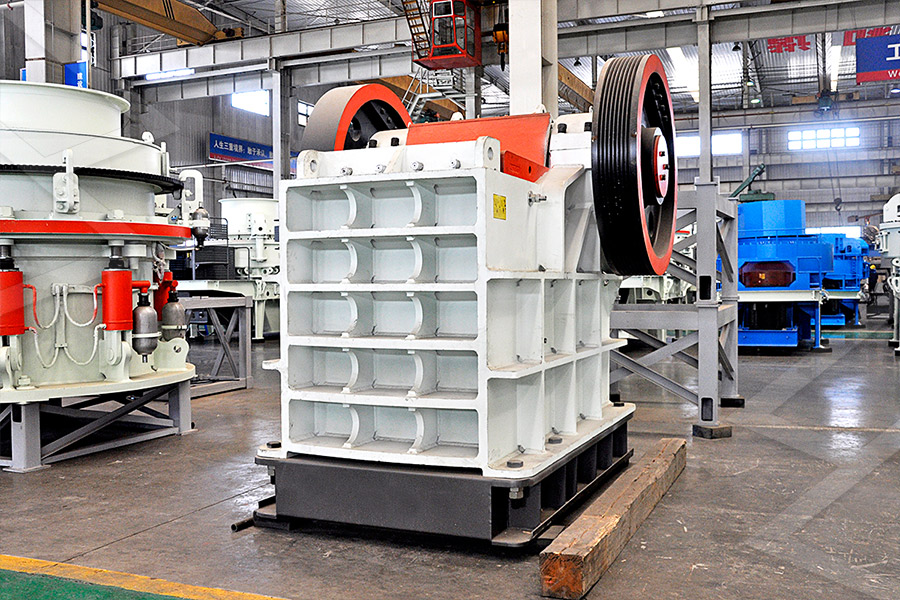

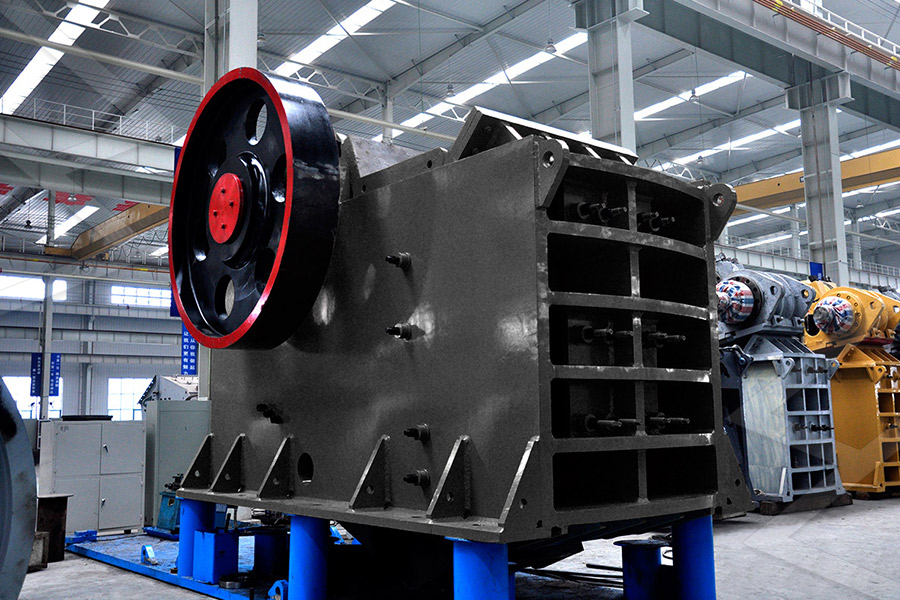

Symons cone crusher, Henan Deya Machinery Co., Ltd.

Sep 19, 2018. PSGD2120: 2134: 1625: 172: 580744: 400: 89500: 4613×3302×4638. 4. Symons cone crusher advantages. 1. Various types of crushing cavities are available. Customers can choose the crushing cavity type with high crushing efficiency, uniform product size, and suitable particle size according to production needs. 2. The unique dustproof …

PSGD: Petroleum Structure and Geomechanics Division (AAPG)

Applying DP to a Model (DPSGD+DPLogits), 学渣渣渣渣渣's blog

Apr 26, 2022. Applying DP to a model (DPSGD+DPLogits): this post explains how modifying the loss function during training gives the trained model a degree of DP-based resistance. In addition, there is another way to achieve differential privacy, by adding noise at the model's output stage: DPLogits. See the paper: Sampling Attacks: Amplification of Membership Inference …

The American Association of Petroleum Geologists is an international organization with over 38,000 members in 100-plus countries. The purposes of this Association are to advance the science of geology … (AAPG > Events > Event Listings)

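[Paper Notes] Projected Gradient Descent (PGD), Zhihu column

Jan 18, 2021. The main tool in our experiments is projected gradient descent (PGD), since it is the standard method for large-scale constrained optimization. Surprisingly, our experiments show that the inner problem is tractable after all, at least from the perspective of first-order methods. Although many local maxima are scattered widely within x_i + S, their loss values tend to be very concentrated. This …

Jul 10, 2018. In this paper the researchers propose asynchronous decentralized parallel stochastic gradient descent (ADPSGD), which is robust in heterogeneous environments, communication-efficient, and maintains the best convergence rate. Theoretical analysis shows that ADPSGD converges at the same optimal rate as SGD and speeds up linearly with the number of workers. (ICML 2018: Tencent AI Lab explains its 16 accepted papers, Tencent Cloud Developer Community)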

[1710.06952] Asynchronous Decentralized Parallel Stochastic Gradient

Oct 18, 2017. To the best of our knowledge, ADPSGD is the first asynchronous algorithm that achieves a similar epoch-wise convergence rate as AllReduce-SGD, at an over 100-GPU scale. Subjects: Optimization and Control (math.OC); Machine Learning (cs.LG); Machine Learning (stat.ML). Cite as: arXiv:1710.06952 [math.OC]

Feb 12, 2022. BVRLPSGD enjoys second-order optimality with nearly the same communication complexity as the best known one of BVRLSGD to find first-order optimality. Particularly, the communication complexity is better than non-local methods when the local datasets heterogeneity is smaller than the smoothness of the local loss. In an … (Title: Escaping Saddle Points with Bias-Variance Reduced Local …)

XiLin Li Preconditioned stochastic gradient descent (PSGD)

PSGD performs well on mathematical optimization benchmark problems, e.g., the Rosenbrock function minimization one. It can solve extremely challenging machine learning problems, e.g., the delayed-XOR sequences classification problem using either vanilla RNN or LSTM (S. Hochreiter and J. Schmidhuber: Long short-term memory).

An overview: PSGD (preconditioned stochastic gradient descent) is a general-purpose second-order optimization method. PSGD differentiates itself from most existing methods by its inherent abilities of handling non-convexity and gradient noises. Please refer to the original paper for its design ideas. (psgdtorch/README.md at master, lixilinx/psgdtorch, GitHub)

Decentralized Distributed Deep Learning with Low-Bandwidth

The results show that RDPSGD can effectively save the time and bandwidth cost of distributed training and reduce the complexity of parameter selection compared with the Top-K-based method. The method proposed in this paper provides a new perspective for the study of onboard intelligent processing, especially for online learning on a smart …

May 22, 2020. We propose the position-based scaled gradient (PSG) that scales the gradient depending on the position of a weight vector to make it more compression-friendly. First, we theoretically show that applying PSG to the standard gradient descent (GD), which is called PSGD, is equivalent to the GD in the warped weight space, a space made by … (Position-based Scaled Gradient for Model Quantization and …)

AAPG PSGD Webinar/QA: Qiqi Wang presents Fractures and YouTube

Understanding Fractures and Reservoir Quality in Tight Sandstones – Coupled Effects of Diagenesis and Deformation. Presented by Qiqi Wang. Discussion Topics: 1) …

The goals of the PSGD are to:
• Increase the quantity and quality of specialty conferences, publications, and educational outreach for the petroleum structure and geomechanics disciplines within the AAPG
• Provide greater discipline-specific assistance to local organizing groups
• Serve an important interest community within the general AAPG
(Petroleum Structure and Geomechanics Division, LinkedIn)

Xuehai Qian (钱学海), University of Southern California: The Latest Breakthroughs in Decentralized Distributed Training Systems, BAAI Community (智源社区)

Jul 31, 2020. ADPSGD [6], published at ICML 2018, is a representative algorithm for decentralized asynchronous training. Its core idea is randomized communication: after each worker finishes an iteration it must communicate, and it does so by picking one of its neighbor workers at random rather than following a fixed communication graph. In addition, each pair of communicating workers must average their model parameters as an atomic operation. Figure 14: Why the atomic operation is necessary. Why …
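The communication pattern described above (finish an iteration, pick a random neighbor, average parameters atomically) can be sketched as a single-threaded simulation. This is only an illustration under stated assumptions, not the ADPSGD implementation: the ring topology, the scalar parameter per worker, the quadratic loss, and the names `pairwise_average` and `simulate_gossip` are all hypothetical. Atomicity is modeled simply by updating both workers inside one function call; a real system would need locking or an equivalent protocol.

```python
import random
from typing import List


def pairwise_average(params: List[float], i: int, j: int) -> None:
    """Replace the parameters of workers i and j with their average.

    In this single-threaded sketch the update is trivially atomic.
    """
    avg = 0.5 * (params[i] + params[j])
    params[i] = avg
    params[j] = avg


def simulate_gossip(n_workers: int = 8, iterations: int = 4000,
                    lr: float = 0.1, target: float = 2.0,
                    seed: int = 0) -> List[float]:
    """Simulate random-neighbor averaging on a ring (assumed topology)."""
    rng = random.Random(seed)
    # Each worker holds one scalar parameter (assumption for brevity).
    params = [rng.uniform(-5.0, 5.0) for _ in range(n_workers)]
    for _ in range(iterations):
        # A randomly chosen worker finishes a local iteration.
        i = rng.randrange(n_workers)
        # Local gradient step on the toy loss 0.5 * (x - target)^2.
        params[i] -= lr * (params[i] - target)
        # Pick one ring neighbor at random instead of a fixed partner.
        j = rng.choice([(i - 1) % n_workers, (i + 1) % n_workers])
        pairwise_average(params, i, j)
    return params


workers = simulate_gossip()
print(workers)
```

In this toy setting every worker ends up close to `target`, because the averaging step preserves the sum of the two parameters involved while the local steps pull each worker toward the minimizer.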

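Position-based Scaled Gradient for Model Quantization and

May 22, 2020. First, we theoretically show that applying PSG to the standard gradient descent (GD), which is called PSGD, is equivalent to the GD in the warped weight space, a space made by warping the original weight space …

Aug 15, 2013. TensorFlow implementation of PSGD. Overview: PSGD (preconditioned stochastic gradient descent) is a general-purpose second-order optimization method. PSGD differs from most existing methods in its inherent ability to handle non-convexity and gradient noise. Please refer to … for the design ideas. Archived. This updated implementation works with TF 2.x and also greatly simplifies the use of the Kronecker product preconditioner. (Parallel stochastic gradient descent algorithm PSGD, FeynmanMa7's blog, CSDN Blog)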

2. Iteration steps of gradient descent. An intuitive explanation of gradient descent: suppose we are standing somewhere on a large mountain. Because we do not know the way down, we decide to take it one step at a time: at each position we reach, we compute the gradient at the current position and walk downhill along the negative gradient direction, which is the steepest direction at that point … (Gradient Descent (SGD): Principles and Improved Optimization Algorithms, Zhihu)
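The "one step at a time" description of gradient descent can be illustrated with a minimal sketch. The objective f(x) = (x - 3)^2, the step size, and the function name `gradient_descent` are assumptions chosen for illustration; they do not come from the article being quoted.

```python
from typing import Callable, List


def gradient_descent(grad: Callable[[float], float], x0: float,
                     lr: float = 0.1, steps: int = 100) -> List[float]:
    """Return the sequence of positions visited by plain gradient descent."""
    x = x0
    path = [x]
    for _ in range(steps):
        # Evaluate the gradient at the current position and
        # take one step along the negative gradient direction.
        x = x - lr * grad(x)
        path.append(x)
    return path


# Toy objective (assumption): f(x) = (x - 3)^2, so f'(x) = 2 * (x - 3).
path = gradient_descent(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(path[-1])
```

With this step size, the distance to the minimizer at x = 3 shrinks by a factor of 0.8 on every step, so the final position is very close to 3.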

… slower in heterogeneous settings. ADPSGD, a newly proposed synchronization method which provides numerically fast convergence and heterogeneity tolerance, suffers from deadlock issues and high synchronization overhead. Is it possible to get the best of both worlds: designing a distributed training method that has both high performance … (Prague: High-Performance Heterogeneity-Aware …)

May 21, 2021. Stochastic gradient descent (SGD) and projected stochastic gradient descent (PSGD) are scalable algorithms to compute model parameters in unconstrained and constrained optimization problems. In comparison with SGD, PSGD forces its iterative values into the constrained parameter space via projection. From a statistical point of … ([2105.10315] Online Statistical Inference for Parameters Estimation …)
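The difference between SGD and projected SGD described above comes down to one extra projection after each update. The following is a minimal sketch under assumptions that are not in the abstract: the constraint set is a Euclidean ball, the loss is 0.5 * ||x - target||^2 with Gaussian gradient noise, and the names `project_onto_ball` and `projected_sgd` are hypothetical.

```python
import math
import random
from typing import List


def project_onto_ball(x: List[float], radius: float = 1.0) -> List[float]:
    """Euclidean projection of x onto the ball of the given radius."""
    norm = math.sqrt(sum(v * v for v in x))
    if norm <= radius:
        return list(x)
    return [v * radius / norm for v in x]


def projected_sgd(target: List[float], steps: int = 2000, lr: float = 0.05,
                  noise: float = 0.1, radius: float = 1.0,
                  seed: int = 0) -> List[float]:
    """Minimize 0.5 * ||x - target||^2 over the ball using noisy gradients."""
    rng = random.Random(seed)
    x = [0.0] * len(target)
    for _ in range(steps):
        # Stochastic gradient: exact gradient plus Gaussian noise.
        g = [xi - ti + rng.gauss(0.0, noise) for xi, ti in zip(x, target)]
        # Ordinary SGD step.
        x = [xi - lr * gi for xi, gi in zip(x, g)]
        # Projection step: force the iterate back into the constraint set.
        x = project_onto_ball(x, radius)
    return x


x_hat = projected_sgd([3.0, 4.0])
print(x_hat)
```

Because the unconstrained minimizer (3, 4) lies outside the unit ball, the constrained solution is its projection (0.6, 0.8), and the iterates settle near that point while staying feasible.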

Position-based Scaled Gradient for Model Quantization and

In this section, we describe the proposed position-based scaled gradient descent (PSGD) method. In PSGD, a scaling function regularizes the original weight to merge to one of the desired target points which performs well at both uncompressed and compressed domains. This is equivalent to optimizing via SGD in the warped weight space.